Qualcomm has officially entered the high-stakes artificial intelligence chip market, unveiling plans to release AI accelerator semiconductors that will directly compete with industry leaders Nvidia and AMD.

The announcement marks a strategic pivot for the wireless connectivity giant, traditionally known for mobile device processors rather than data center infrastructure.

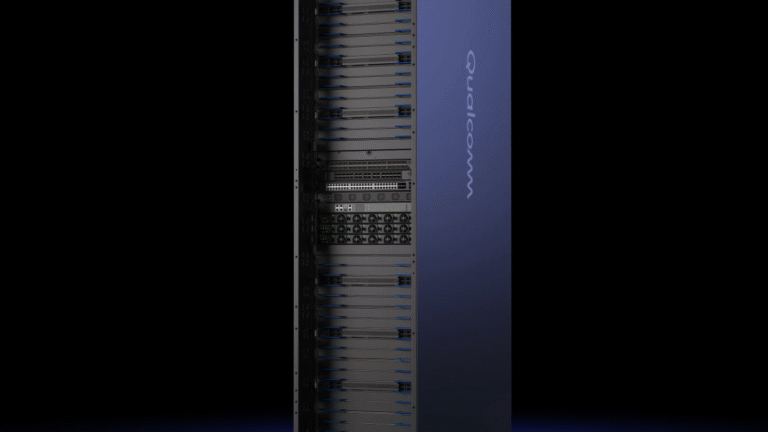

The company revealed two product lines during Monday’s announcement: the AI200, scheduled for commercial availability in 2026, and the AI250, planned for 2027 release.

Both chips are designed to operate in full, liquid-cooled server rack configurations, matching the deployment scale of competing solutions from Nvidia and AMD.

Leveraging Mobile Technology for Data Center Scale

Qualcomm’s approach draws directly from its smartphone technology portfolio, specifically its Hexagon neural processing units (NPUs) currently deployed in mobile devices.

This foundation represents years of AI development at the edge computing level, now scaled up for data center applications.

“We first wanted to prove ourselves in other domains, and once we built our strength over there, it was pretty easy for us to go up a notch into the data center level,” explained Durga Malladi, Qualcomm’s general manager for data center and edge.

The strategy demonstrates how mobile AI innovations can translate to enterprise-scale infrastructure.

Targeting the $6.7 Trillion Data Center Boom

Qualcomm’s market entry comes as the AI infrastructure sector experiences unprecedented growth, with McKinsey projecting nearly $6.7 trillion in capital expenditures on data centers through 2030.

The majority of this investment will flow toward systems built around AI chips, creating a massive opportunity for semiconductor manufacturers.

Currently, Nvidia dominates this landscape with over 90% market share, propelling the company to a market capitalization exceeding $4.5 trillion.

Nvidia’s GPUs powered the training of OpenAI’s GPT models, the large language models underlying ChatGPT, cementing its position as the industry standard.

Inference Over Training

Unlike Nvidia’s chips, which handle both training and inference, Qualcomm’s chips specifically target inference: running already-trained AI models rather than training new ones.

This strategic positioning addresses a growing market segment as companies deploy AI applications at scale.

The chipmaker emphasizes cost advantages, claiming its rack-scale systems will offer lower operational expenses for cloud service providers.

Each rack draws 160 kilowatts of power, comparable to some high-performance Nvidia GPU racks. Power draw alone does not establish efficiency, which depends on how much inference work each rack delivers per watt.

Flexible Architecture for Diverse Customers

Qualcomm is adopting a modular sales approach, offering complete rack systems or individual components. Malladi noted that hyperscalers (large cloud providers that prefer custom designs) can purchase chips and parts separately to build proprietary configurations.

“What we have tried to do is make sure that our customers are in a position to either take all of it or say, ‘I’m going to mix and match,’” Malladi stated.

Interestingly, Qualcomm suggests even competitors like Nvidia or AMD could purchase certain components, such as its central processing units (CPUs), for their own systems.

Technical Advantages and Early Partnerships

Qualcomm touts several competitive advantages, including power consumption efficiency, total cost of ownership, and innovative memory architecture. The company’s AI cards support 768 gigabytes of memory, surpassing current offerings from Nvidia and AMD, potentially enabling more complex AI models.
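To give a rough sense of what 768 gigabytes per card could mean for model size, the sketch below estimates how many model parameters fit in that memory at common numeric precisions. It assumes decimal gigabytes and counts only the model weights; memory for activations and caches is ignored, and the function name and precision table are illustrative, not anything Qualcomm has published.

```python
# Rough upper bound on model size for a given memory capacity.
# Assumptions (not from Qualcomm): 1 GB = 10**9 bytes, and all memory
# holds weights only (no activations, caches, or runtime overhead).

CARD_MEMORY_GB = 768  # per-card memory figure quoted in the article

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {
    "16-bit": 2.0,
    "8-bit": 1.0,
    "4-bit": 0.5,
}


def max_params_billions(memory_gb: float, bytes_per_param: float) -> float:
    """Return the largest parameter count, in billions, whose weights fit."""
    if memory_gb < 0 or bytes_per_param <= 0:
        raise ValueError("memory must be >= 0 and bytes_per_param > 0")
    # GB (10**9 bytes) divided by bytes per parameter gives billions of parameters.
    return memory_gb / bytes_per_param


for name, size in BYTES_PER_PARAM.items():
    billions = max_params_billions(CARD_MEMORY_GB, size)
    print(f"{name}: up to about {billions:.0f} billion parameters")
```

Under these assumptions, a single card could hold the weights of a model with roughly 384 billion parameters at 16-bit precision. Real deployments would fit less, since inference also needs working memory.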

The company has already secured commitments, including a partnership announced in May with Saudi Arabia’s Humain, under which Qualcomm will supply data centers with AI inferencing chips for deployments drawing up to 200 megawatts of power.
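For scale, the 200-megawatt figure can be set against the 160 kilowatts quoted per rack. The sketch below is a back-of-the-envelope estimate, not a figure from Qualcomm or Humain: it assumes every rack draws the full 160 kilowatts, and the `overhead_fraction` parameter (the share of power going to cooling and other facility needs) is a hypothetical knob that defaults to zero.

```python
# Back-of-the-envelope estimate of how many racks fit in a power budget.
# The two constants come from the article; everything else is an assumption.

RACK_POWER_KW = 160        # per-rack power draw quoted in the article
DEPLOYMENT_POWER_MW = 200  # upper bound of the Humain deployment


def max_racks(deployment_mw: float, rack_kw: float,
              overhead_fraction: float = 0.0) -> int:
    """Return the number of whole racks a power budget can supply.

    overhead_fraction is a hypothetical share of the budget (0 to <1)
    reserved for cooling and other non-rack loads.
    """
    if rack_kw <= 0:
        raise ValueError("rack_kw must be positive")
    if not 0 <= overhead_fraction < 1:
        raise ValueError("overhead_fraction must be in [0, 1)")
    usable_kw = deployment_mw * 1000 * (1 - overhead_fraction)
    return int(usable_kw // rack_kw)


print(max_racks(DEPLOYMENT_POWER_MW, RACK_POWER_KW))  # 1250
```

With no overhead, 200 megawatts would supply at most 1,250 racks at 160 kilowatts each. Any power reserved for cooling or networking lowers that ceiling.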

However, Qualcomm declined to disclose specific pricing for chips, cards, or rack configurations, or the number of NPUs per rack.

Market Dynamics and Growing Competition

The timing reflects broader industry trends as AI companies seek alternatives to Nvidia’s dominant position.

Earlier this month, OpenAI announced plans to purchase chips from AMD, the second-largest GPU maker, and potentially acquire an equity stake in the company.

Tech giants including Google, Amazon, and Microsoft are simultaneously developing proprietary AI accelerators for their cloud services, intensifying competition.

Qualcomm’s entry adds another capable player to this rapidly evolving market, potentially accelerating innovation while providing customers with more choices for their AI infrastructure investments.